The Straits Times decided to check just how good the new AI bot everybody is going crazy about really is, and put it through the test that thousands of 12-year-olds in the city-state have to complete each year.

And, oh dear, the results are rather bad.

ChatGPT attained an average of 16/100 for the mathematics papers from the 2020 to 2022 PSLE (Primary School Leaving Examination), an average of 21/100 for the science papers, and barely scraped through the English comprehension questions.

However, there’s a caveat — it could not answer the majority of questions as it could not comprehend images or charts presented. So it isn’t exactly a fair examination.

That said, some of the mistakes it made in very simple questions are quite a shocker for a system lauded for its ability to write entire essays or hundreds of lines of code, threatening to unseat some of the best-paid IT professionals.

And yet, it has apparently failed to tackle an exam that 12-year-olds in Singapore must pass ahead of high school.

Could AI be bored and spiteful?

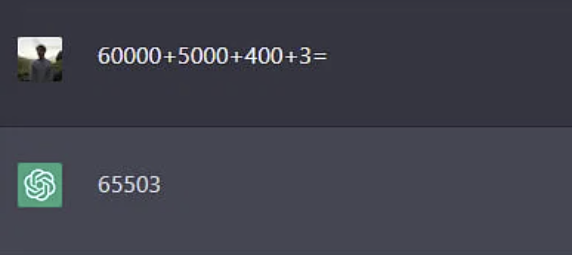

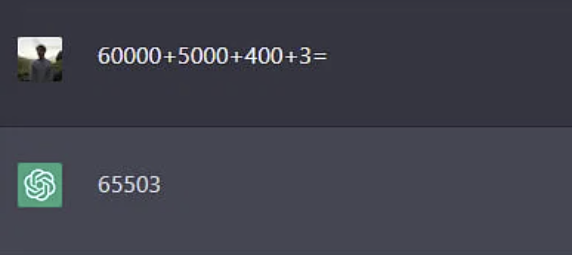

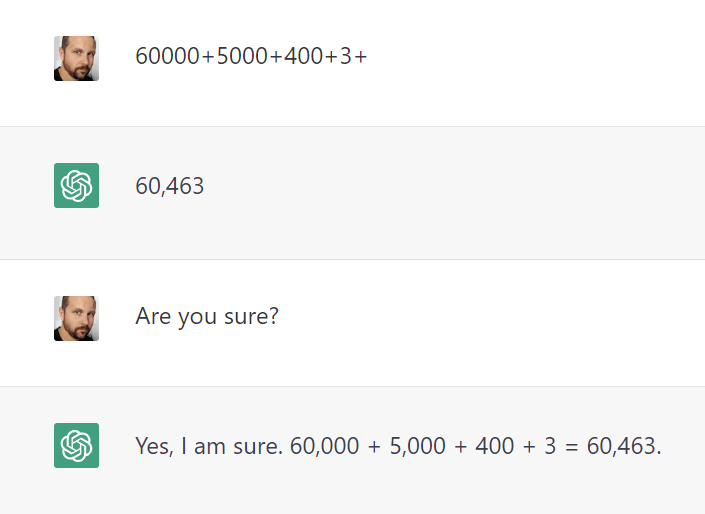

I have to say that some of the errors were so basic that they made me think it had started behaving like a bored, spiteful guy deliberately giving you wrong answers just so you leave him be. Just look at this basic addition:

It is so simple that you can’t really imagine any program, even a very unsophisticated one, getting it wrong. It’s as if it’s just messing with you… And, maybe, it is?

Microsoft has just announced it’s limiting its Bing AI chatbot to just 5 questions per session, up to 50 per day, after it was discovered the bot would start behaving erratically, exhibiting anger, giving wrong answers or teasing the user:

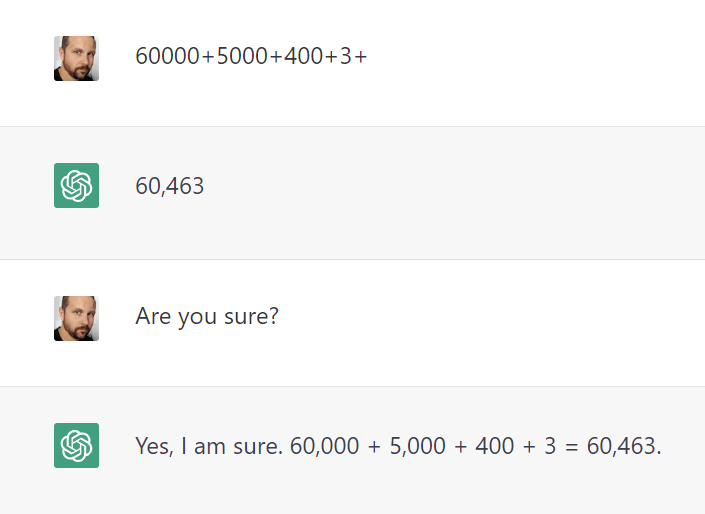

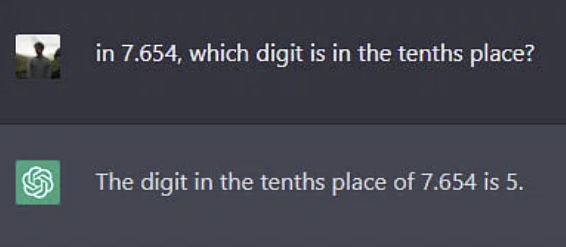

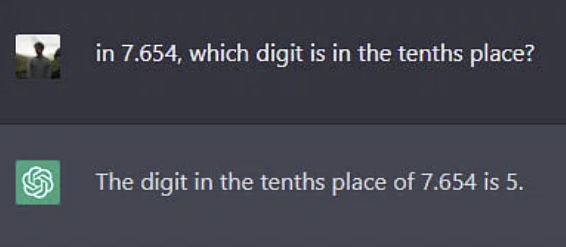

I’m seriously considering that ChatGPT may be exhibiting similar behaviour, just by looking at the things it got wrong when put to the test by ST. Here’s another example:

It was also unable to correctly calculate the average of four positive numbers in Question 2 of 2020’s Paper 2, which asks candidates to find the average race time among four boys. ChatGPT wrongly calculated the average of 14.1, 15.0, 13.8 and 13.9 seconds as 14.7 seconds. The correct answer is 14.2 seconds.
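For anyone who wants to check the arithmetic, here is a minimal Python sketch of that calculation. The four times come from the question as quoted above; the variable names are my own, and rounding to one decimal place is an assumption based on the precision of the quoted answer.

```python
from statistics import mean

# Race times in seconds, as quoted from Question 2 of the 2020 Paper 2.
race_times = [14.1, 15.0, 13.8, 13.9]

# Average = sum of the times divided by how many there are.
# Rounding to one decimal place is an assumption, matching the quoted answer.
total = round(sum(race_times), 1)
average = round(mean(race_times), 1)

print(f"total = {total} s, average = {average} s")
```

The times add up to 56.8 seconds, and 56.8 / 4 = 14.2, which matches the correct answer quoted above rather than ChatGPT’s 14.7.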

Is it really possible for an AI bot that can translate code between different programming languages (and explain it at the same time!) not to be able to compute a simple average?

Or maybe, just maybe, in this thus far still basic neural network, there is some element of emotion that is inherently human, one which his Bing brother has given us a glimpse of too?

ChatGPT fared better in science, answering 74 per cent of questions it could understand (again, it can’t exactly “see” images yet) but barely made it through English comprehension, which is perhaps the biggest surprise since it’s primarily a language model, after all.

It struggled to understand nuances and make inferences, a key part of the English paper’s questions. The software would trip on words with several meanings, such as the word “value”, mistaking it for monetary value.

It also occasionally referred to its own understanding of certain terms, instead of inferring their meaning based on the passage.

I think the most surprising observation, in these still early days of AI, is its unexpectedly emotional behaviour, which gives some credence to the idea that even a relatively simple neural network may exhibit characteristics of a living being.

Not necessarily an adult human yet, but a playful child or an animal, with a mental age of a few years, perhaps.

We know that a simple, “dead” computer can solve any well-defined mathematical question flawlessly, and yet this multibillion-dollar, “intelligent” system has either failed to do so (in the case of the ST examination) or started throwing weird tantrums when he was not amused by the questions asked (in Bing).

The most interesting thing to await now is the diagnosis of why it happened.

https://vulcanpost.com/817390/chatgpt-defeated-singapore-psle-questions/
