https://www.rt.com/news/455341-chinese-hackers-tesla-autopilot/

Chinese hackers make Tesla drive into oncoming traffic (VIDEO)

Published time: 2 Apr, 2019 11:21

File Photo: © REUTERS / Alexandria Sage

Researchers from Keen Labs in China, one of the most widely respected cybersecurity research groups in the world, have successfully hacked a Tesla Model S autopilot system and forced the car to drive into an oncoming lane.

The wily white hat hackers developed different forms of attack to confuse and disrupt the Tesla autopilot lane recognition system.

In the first method, Keen researchers added a large number of patches to the dividing line on the road itself to blur it. While it did fool the autopilot, the researchers deemed it too conspicuous to be of any practical, if malicious, use in the real world.

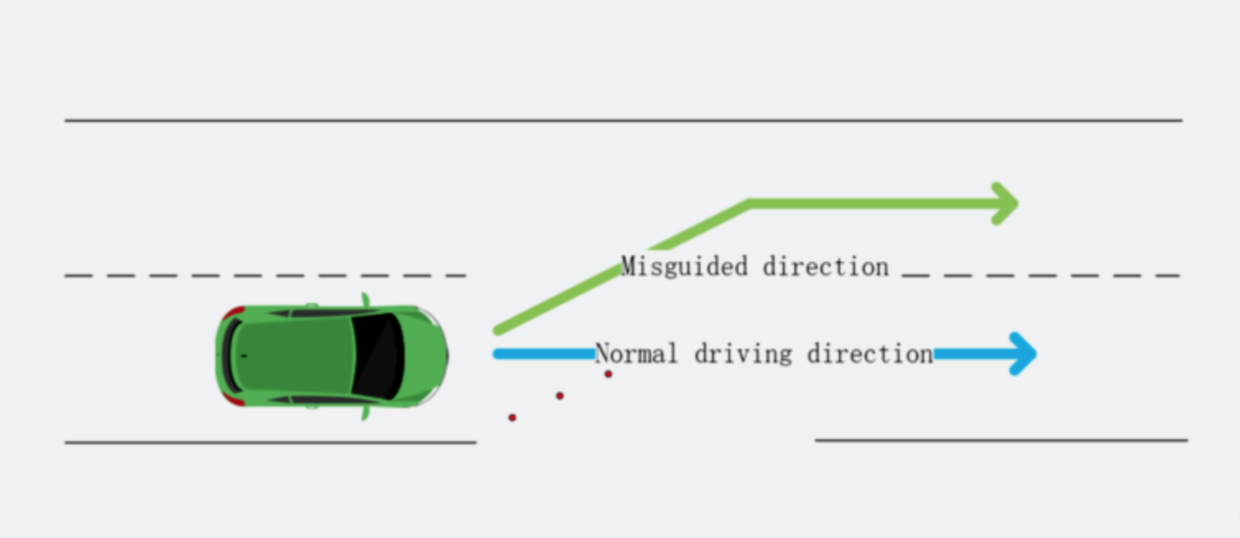

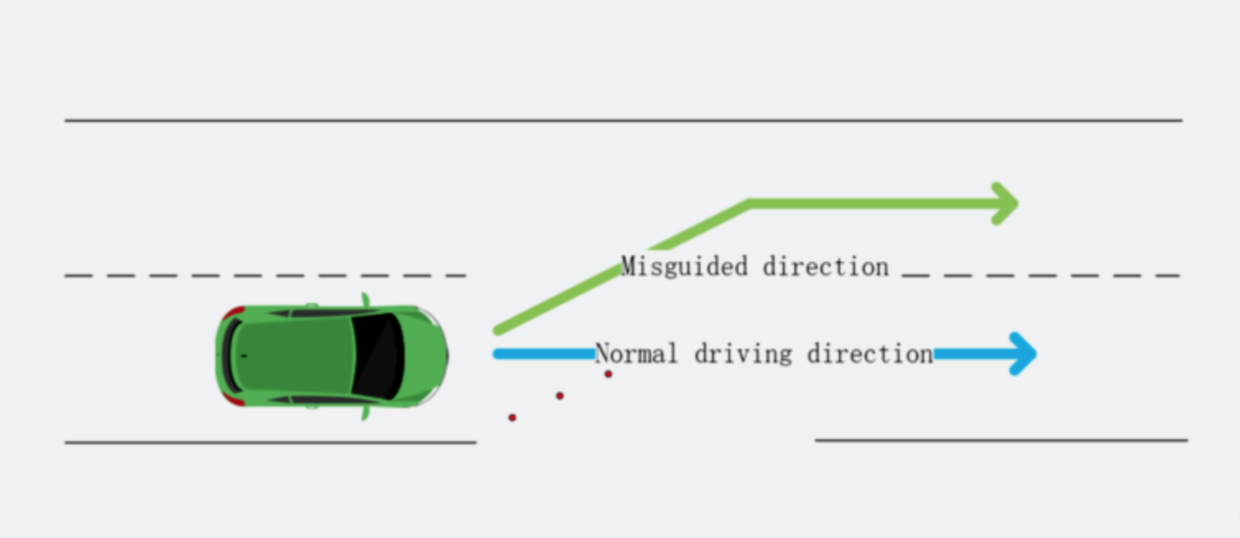

However, a more subtle approach proved far more effective: using just three strategically placed stickers, the researchers were able to create a “fake lane.”

For instance, when the stickers were placed at an intersection, hackers could fool the Tesla into thinking the lane continued into what was actually the oncoming lane.

© Keen Labs

“Our experiments proved that this architecture has security risks and reverse-lane recognition is one of the necessary functions for autonomous driving in non-closed roads,” Keen Labs wrote in a paper.

A Tesla spokesperson told RT.com, however, that the research is “not a realistic concern,” as the driver can easily override the autopilot system to avoid crashing into oncoming traffic as a result of some maliciously placed stickers.

“Although this report isn’t eligible for an award through our bug bounty program, we know it took an extraordinary amount of time, effort, and skill, and we look forward to reviewing future reports from this group,” the spokesperson added.

Tesla launched its Bug Bounty program in 2014 to encourage collaboration with security researchers. In 2018, Tesla increased the bounty to $15,000 for any vulnerabilities discovered by private security researchers.

The team at Keen also claimed previously that they could remotely hack and control the steering wheel and activate the windscreen wipers. The steering wheel hack was limited in scope to certain circumstances; however, they claimed that when a vehicle was in cruise control the attack worked “without limitations.”